TEACHING NAO ROBOT TO DO THE RIGHT THING

NAO robots are immensely popular tools for scientific research. Relatively inexpensive and fully programmable, their humanoid features make them perfect research subjects in disciplines as complicated and human-oriented as ethics. There is a lot of work to be done, because if you think teaching children to do the right thing is tough, just wait until you try to program your robot!

Human beings are creatures complicated by millions of years of biological evolution and must live within a complicated structure of individual and community responsibilities; little wonder, then, that individuals often make decisions at variance with the moral principles that regulate those communities. On the other hand, the evolution of actual humanoid robots like NAO is only a decade or two old, and whereas human evolution has been hit or miss, the worst that can be said of robot evolution is that the parts are provided by the lowest bidder.

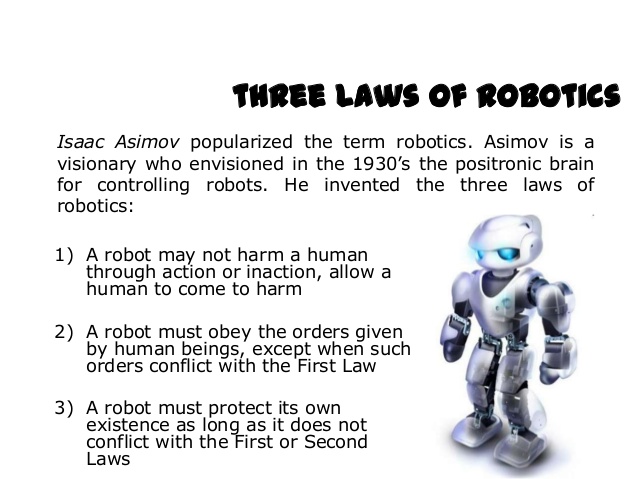

For that reason it is easy to believe that while human beings take years to learn, all that robots need to behave properly is to come off the production line programmed with the famous Three Laws of Robotics, promulgated by science-fiction writer Isaac Asimov in a 1940s short story. But roboticists and ethicists interested in artificial intelligence have learned, in a number of studies using NAO, that teaching NAO the right thing to do isn’t as easy as Asimov thought!

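The robots in Asimov’s short story Runaround were programmed with the following three rules or laws:

1. A robot may not injure a human being or, through inaction, allow a human being to come to harm.
2. A robot must obey the orders given it by human beings except where such orders would conflict with the First Law.
3. A robot must protect its own existence as long as such protection does not conflict with the First or Second Laws.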

The three laws encapsulate rather elegantly the Judeo-Christian theme that human life is more important than property and that, when necessary, property (i.e., the robot) must be sacrificed to protect human life. What could be simpler? Perhaps nothing; but Mr. Asimov wrote before the coming of the automated automobile…

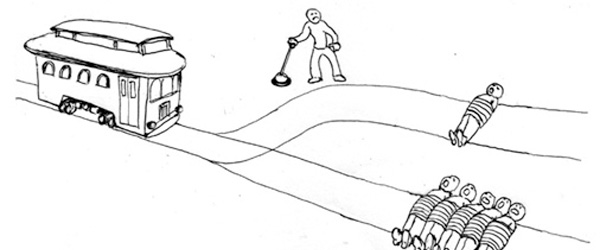

Years ago, in the days of the trolley car, ethicists came up with a devilishly complicated ethical dilemma called the “trolley problem”: Imagine a runaway railway trolley is about to kill five innocent people who are on the tracks. You can save them only if you pull a lever that diverts the trolley onto another track, where it will hit and kill an innocent bystander. What do you do? In another possible scenario, the only way to stop the trolley is to push the bystander onto the tracks.

An exceedingly short, unscientific poll indicates that most people would reluctantly pull the lever; but no one could countenance pushing the bystander onto the tracks.

Okay, imagine ourselves in one of Google's new automated cars in a similar situation: The robot’s prime directive is to safeguard human life; there is nothing in that directive that says five lives are more worthy than one; no matter how it acts, it is going to violate the First Law. What is the robot going to do in the absence of any other programming? Probably dither with smoke pouring out of its head and sensors whirring like Robbie the robot. (And it’s hard to imagine little NAO pushing anybody onto the tracks!)
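To see why the robot dithers, here is a minimal Python sketch of a literal-minded First Law check. Everything in it is an assumption made up for illustration: the `Action` class, the `humans_harmed` field, and the `choose` function are hypothetical names, not part of NAO's software or of any real automated car.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    name: str
    humans_harmed: int  # hypothetical estimate of people harmed by this choice


def first_law_permits(action: Action) -> bool:
    """Literal First Law: any harm to any human is forbidden."""
    return action.humans_harmed == 0


def choose(actions):
    """Return the first permitted action, or None if every option breaks the law."""
    permitted = [a for a in actions if first_law_permits(a)]
    return permitted[0] if permitted else None


trolley = [
    Action("stay on course", humans_harmed=5),
    Action("swerve", humans_harmed=1),
]

print(choose(trolley))  # prints None: no option satisfies the First Law
```

With nothing but the First Law to go on, every option is forbidden and the function has no answer to give, which is the software equivalent of smoke pouring out of the robot's head.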

Then let’s program the robot to value five lives above one life; that's simple enough. But what if the one life is a pregnant woman and the five are all over 75 years of age? What if the one person is President of the United States? What the heck do we want the robot to do in those scenarios? How you feel about it probably depends on your age or your politics, and the robot's response will likely depend on the age and politics of the programmer.
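The point about the programmer can be made concrete with a short Python sketch. It is only an illustration under loud assumptions: the `Option` class, the `cost` and `choose` functions, the labels, and above all the weight of `6.0` are invented here and do not reflect any real system or any recommended values.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Option:
    name: str
    people: tuple  # hypothetical labels for the people this option would harm


def cost(option, weights, default=1.0):
    """Sum a per-person weight; anyone without a listed weight counts as 1.0."""
    return sum(weights.get(person, default) for person in option.people)


def choose(options, weights=None):
    """Pick the option with the lowest total weighted harm."""
    weights = weights or {}
    return min(options, key=lambda o: cost(o, weights))


options = [
    Option("stay on course", ("senior",) * 5),
    Option("swerve", ("pregnant woman",)),
]

# Every life counts equally: five outweighs one, so the robot swerves.
print(choose(options).name)  # prints swerve

# One programmer's made-up weighting flips the decision.
print(choose(options, {"pregnant woman": 6.0}).name)  # prints stay on course
```

The arithmetic is trivial; the hard part is the table of weights, and that table is written by a person with an age and a politics of their own.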

Aldebaran’s NAO robot is, and has been for years, the most popular anthropomorphic robot in educational and scientific research. It should come as no surprise that ethicists with an interest in developing an ethical system for robots would find NAO a valuable tool. Of course, the little NAO robot doesn’t have the brainpower to make the right decisions in situations as complicated as the trolley problem, but then I don’t know anyone who does!